Natural language is an imperfect vehicle for meaning. On the one hand, some expressions can be interpreted in multiple ways; on the other hand, there are often many superficially divergent ways to express very similar meanings. Semantic representations attempt to disentangle these two effects by exposing similarities and differences in how a word or sentence is interpreted. Such representations, and algorithms for working with them, constitute a major research area in natural language processing.

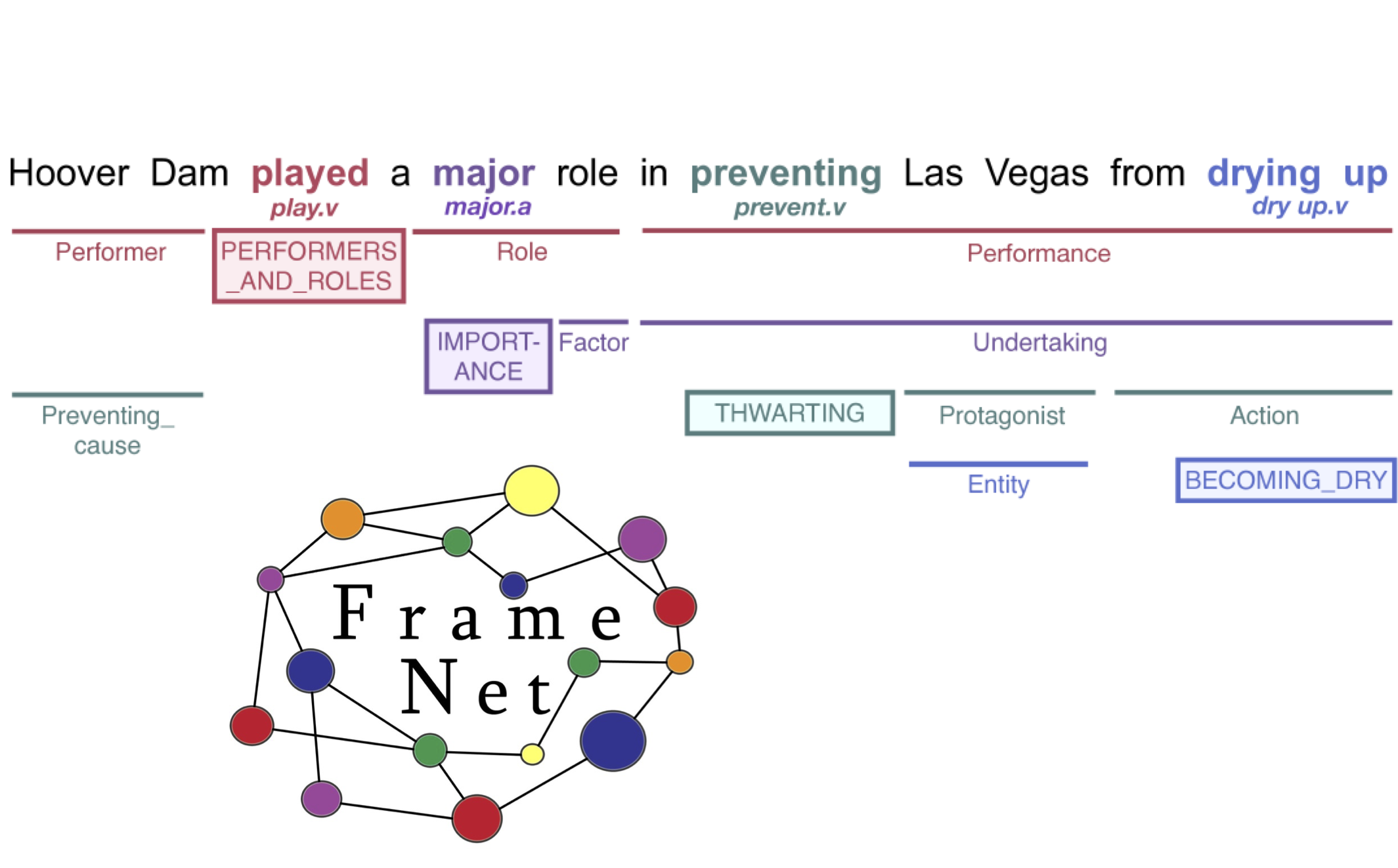

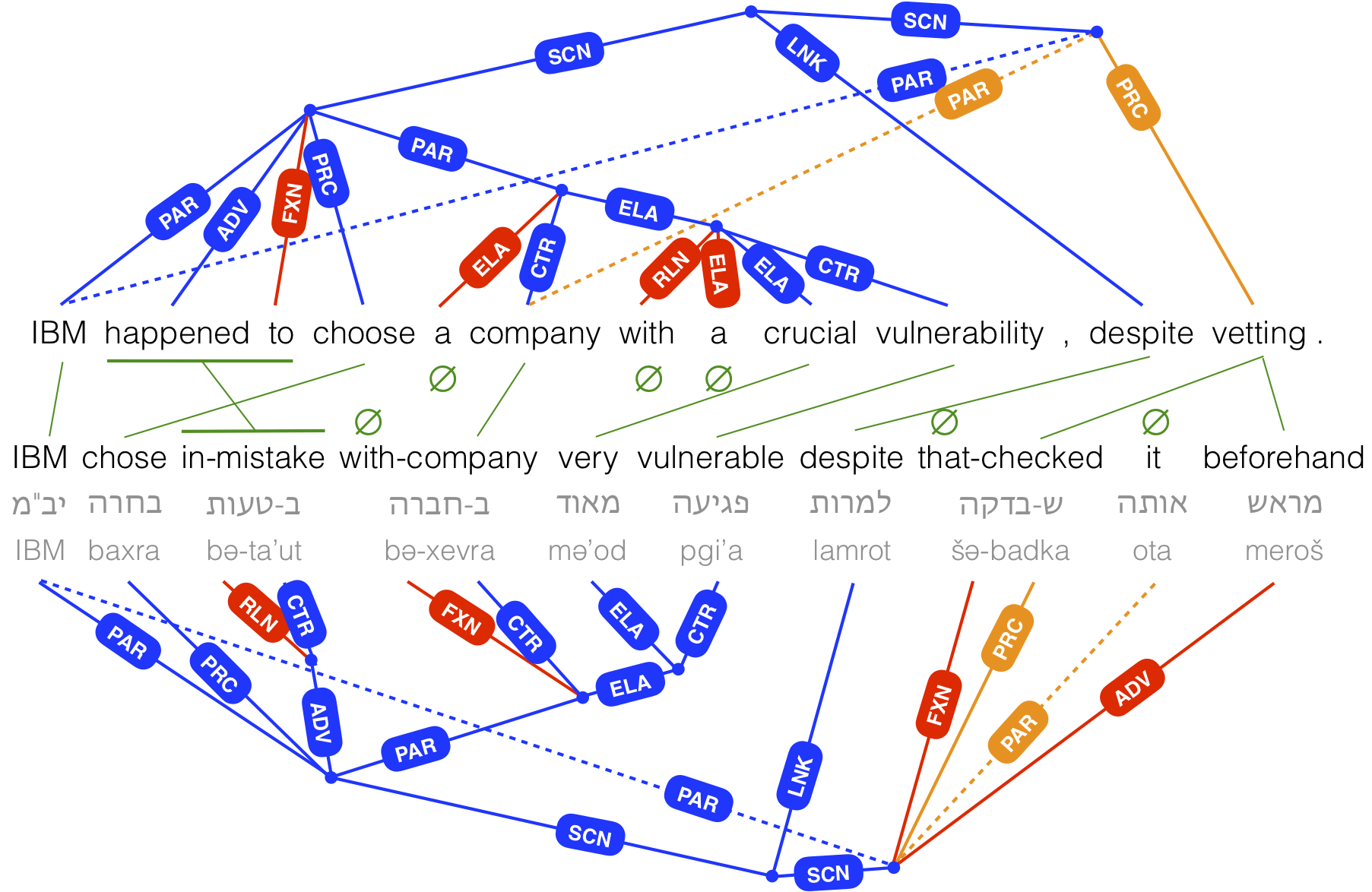

This course will examine semantic representations for natural language from a computational/NLP perspective. Through readings, presentations, discussions, and hands-on exercises, we will put a semantic representation under the microscope to assess its strengths and weaknesses. For each representation we will confront questions such as: What aspects of meaning are and are not captured? How well does the representation scale to the large vocabulary of a language? What assumptions does it make about grammar? How language-specific is it? In what ways does it facilitate manual annotation and automatic analysis? What datasets and algorithms have been developed for the representation? What has it been used for? Representations covered in depth will include FrameNet (http://framenet.icsi.berkeley.edu), Universal Cognitive Conceptual Annotation (http://www.cs.huji.ac.il/~oabend/ucca.html), and Abstract Meaning Representation (http://amr.isi.edu/). Term projects will consist of (i) innovating on a representation's design, datasets, or analysis algorithms, or (ii) applying it to questions in linguistics or downstream NLP tasks.